Ship the airplane: The cultural, organizational and technical reasons why Boeing cannot recover

In October 2018 and March 2019, two brand-new Boeing 737 MAX aircraft crashed within half a year of one another, killing everyone aboard both aircraft. Because the new model had flown so few flights, those two crashes gave the 737 MAX a rate of 3.08 fatal crashes per million flights.

To put that number in perspective, the rest of the 737 family has a rate of 0.23 and the 737’s main competitor, the A320 family, has a rate of 0.08. The A320neo, the latest version of the A320 and the airplane against which the 737 MAX was designed to compete, has a rate of 0.0 (zero). (airsafe.com)
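The rate arithmetic is easy to check. Here is a minimal Python sketch that uses only the rates quoted above. The helper names are my own, and the flight count it prints is back-calculated from the quoted 3.08 rate for illustration, not a sourced figure.

```python
# Fatal crash rates quoted in the text (fatal crashes per million flights, airsafe.com).
RATES = {
    "737 MAX": 3.08,
    "737 family (other models)": 0.23,
    "A320 family": 0.08,
    "A320neo": 0.0,
}


def per_million(crashes: int, flights: float) -> float:
    """Fatal crashes per million flights."""
    return crashes / flights * 1_000_000


def implied_flights(crashes: int, rate_per_million: float) -> float:
    """Back-calculate how many flights a quoted rate implies."""
    return crashes / rate_per_million * 1_000_000


if __name__ == "__main__":
    # Two crashes at 3.08 per million implies well under a million flights.
    flights = implied_flights(2, RATES["737 MAX"])
    print(f"Implied 737 MAX flights: about {flights:,.0f}")
    ratio = RATES["737 MAX"] / RATES["737 family (other models)"]
    print(f"737 MAX rate vs. the rest of the 737 family: about {ratio:.1f}x")
```

The back-calculation makes the text's point: with so few flights behind it, every crash moves the 737 MAX's rate enormously.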

Both crashes were traced to a software system unique to the airplane, called the Maneuvering Characteristics Augmentation System, or MCAS. The 737 MAX used new, larger engines placed farther forward on the wing (for ground clearance), and those engines created an aerodynamic instability that MCAS was intended to correct.

Soon after the crashes, Boeing revealed that MCAS relied on something called an “angle of attack” (AOA) sensor to tell the software whether that instability needed to be corrected. Angle of attack sensors are relatively unreliable devices mounted on the outside of the airplane.

Because of this unreliability, it is common to mount several AOA sensors on an aircraft. The A320neo, for example, has three. While the 737 MAX has two AOA sensors, the MCAS software used only one of them at a time. As a result, in both crashes MCAS was triggered by bad data from a single AOA sensor.
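To make the single-sensor weakness concrete, here is a minimal Python sketch of the trigger logic as described above: one AOA sensor read, no cross-check. The threshold and the sensor readings are hypothetical numbers chosen for illustration; this is not Boeing's actual MCAS logic.

```python
from dataclasses import dataclass

# Hypothetical activation threshold in degrees (not Boeing's actual value).
AOA_TRIGGER_DEG = 15.0


@dataclass
class AoaSensor:
    side: str           # "left" or "right"
    reading_deg: float  # angle of attack the vane reports


def single_sensor_trigger(active: AoaSensor) -> bool:
    """Original design as described: trust whichever one sensor is active."""
    return active.reading_deg > AOA_TRIGGER_DEG


# Hypothetical readings: a failed left vane and a healthy right vane.
left = AoaSensor("left", 40.0)
right = AoaSensor("right", 5.0)

print(single_sensor_trigger(left))   # True: bad data alone activates MCAS
print(single_sensor_trigger(right))  # False
```

Nothing in that function can notice that the other sensor disagrees. It never looks.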

That bad data rendered each aircraft uncontrollable, and 346 people lost their lives.

The fix is in

Boeing proposed a number of fixes to the problem. The most important: having MCAS use both of the 737 MAX’s AOA sensors instead of just one. If the two sensors disagreed, MCAS would not trigger.
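The proposed fix amounts to a disagreement check before acting. Here is a minimal Python sketch, assuming a hypothetical trigger threshold and a hypothetical disagreement limit (neither is Boeing's published value):

```python
# Hypothetical values for illustration only.
AOA_TRIGGER_DEG = 15.0
DISAGREE_LIMIT_DEG = 5.0


def dual_sensor_trigger(left_deg: float, right_deg: float) -> bool:
    """Fire only when both AOA sensors agree that the angle of attack is high."""
    if abs(left_deg - right_deg) > DISAGREE_LIMIT_DEG:
        # The sensors disagree: inhibit MCAS rather than guess which one is right.
        return False
    return min(left_deg, right_deg) > AOA_TRIGGER_DEG


print(dual_sensor_trigger(40.0, 5.0))   # False: disagreement inhibits MCAS
print(dual_sensor_trigger(18.0, 17.0))  # True: both agree AOA is high
print(dual_sensor_trigger(6.0, 5.0))    # False: both agree AOA is normal
```

Note what the function cannot do: when the two sensors disagree, it can refuse to act, but it cannot tell which sensor is lying.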

Using two sensors instead of one is such an obvious improvement that it is difficult to understand why Boeing did not do so in the first place. Just over half a year ago, Boeing pledged to change the software to use both.

It has yet to demonstrate a viable fix.

Boeing’s inability to demonstrate a fix for its troubled MCAS system shows just how deep the problem is. It illustrates how desperate Boeing is to keep alive a software solution to the aircraft’s instability issues.

Most chillingly, it illustrates just how inadequate such a solution is to the issue.

Most sadly, it is a symbol of the collapse of institutions in the United States. We were once considered the world’s gold standard in everything from education to manufacturing to effective and productive public-sector regulation. That is all going down the drain, flushed by a belief in things that just are not true.

Trying to make sense of it

Since I first learned of the nature of MCAS and its deficiencies, I’ve struggled to come up with a theory of why. How could something as manifestly deadly, and as incompetent, as MCAS ever see the light of day within a company like Boeing?

MCAS is dumb as a bag of hammers, as incomplete as Beethoven’s 10th symphony, and as deadly as an abattoir. Its risk to the company was total. How, then, did it ever see the light of day?

I believe the answer lies in the nature of Boeing’s leadership, and in the effect that leadership has on the company’s culture.

Charles Pezeshki has a theory of empathy in the organization. When I use the term “empathy” in this article, I mean Pezeshki’s term, not the more general vernacular understanding. Specifically, my understanding of empathy in this context is a sense of trust between all individuals in an organization that arises from transparency. That transparency, in turn, enables an understanding of both shared success and shared risk.

What that means to an organization like Boeing is:

- Empathy rules relationships

- Safety is the foundation of empathy

- Empathy catalyzes synergy

- Empathy handles complexity well

- Empathy governs tool (and process) selection

- Different empathy levels are tied to different values

And what it means within an organization is that when empathy erodes, costs go up.

Empathy is important not only within an organization but also between organizations. When empathy is destroyed between organizations, as has happened between Boeing and its subcontractors and suppliers, there is a quantifiable cost. While this cost is similar in concept to corporate goodwill, it is not the same.

A simple calculus gives us a way to understand that cost. Say a subcontractor, such as Spirit AeroSystems, supplies Boeing with finished 737 fuselages at an agreed-upon price of $10 million per fuselage. But that is the price Boeing pays only if there is total empathy, total trust, between the two companies.

If the empathy relationship has eroded, however, Boeing’s actual price goes up. If Spirit cannot trust Boeing to honor their contracts in the future, Spirit will start making contingency plans – such as making fuselages for Boeing’s competitor, Airbus.

Like a suspicious spouse, they will begin to shift resources away from Boeing. They’ll start to “look around” for another, more faithful, partner. They’ll flirt with Airbus and begin retooling their factories in the hope of attracting that new partner. Their machinery will start to make each 737 fuselage a little less well, as the tools become less precise for Boeing and more precise for Airbus.

Their workers, likewise, will shift their future attention from the company that they perceive as yesterday’s news and towards the company with which they hope to form a better relationship. And that will affect the quality of the work that Spirit does for Boeing (down) and Airbus (up).

That also costs money. That cost shows up in what Boeing must spend to rework defective fuselages from Spirit, and in its future negotiations with Spirit.
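The argument can be reduced to back-of-the-envelope arithmetic. Here is a minimal Python sketch, assuming a toy model in which Boeing's effective cost per fuselage is the contract price plus the expected cost of rework. The defect rates and the rework cost are hypothetical numbers, not Boeing or Spirit figures.

```python
CONTRACT_PRICE = 10_000_000  # the $10 million per fuselage from the example above


def effective_unit_cost(contract_price: float, defect_rate: float,
                        rework_cost: float) -> float:
    """Toy model: contract price plus the expected cost of reworking a defective unit."""
    if not 0.0 <= defect_rate <= 1.0:
        raise ValueError("defect_rate must be a probability between 0 and 1")
    return contract_price + defect_rate * rework_cost


# Hypothetical scenarios: full trust vs. an eroded relationship.
high_trust = effective_unit_cost(CONTRACT_PRICE, defect_rate=0.0, rework_cost=2_000_000)
eroded = effective_unit_cost(CONTRACT_PRICE, defect_rate=0.10, rework_cost=2_000_000)
print(f"High trust: ${high_trust:,.0f}  Eroded trust: ${eroded:,.0f}")
```

The contract price never changes on paper; the money leaks out through the second term.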

Redundancy

In aviation, redundancy is everything. One reason is to guard against failure, such as the second engine on a twin-engine airplane. If one fails, the other is there to bring the plane down to an uneventful landing.

Less obvious than guarding against outright failure is the utility of redundancy in conflict resolution. A favorite expression of mine is: “A person with one watch always knows what time it is. A person with two watches is never sure.” In other words, with only one source of truth, the truth is known. With two sources that disagree, there is only uncertainty and chaos.

The straightforward solution to that is triple or more redundancy. With three watches it is easy to vote the wrong watch out. With five, even more so. This engineering principle derives from larger social truths and is embedded in institutions from jury pools to straw polls.
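The watch problem translates directly into code. Here is a minimal Python sketch: with two sources you can detect a disagreement but not resolve it, while with three or more you can take the median (sometimes called mid-value select) and vote the outlier out. The tolerance and the watch readings are hypothetical.

```python
from statistics import median
from typing import Optional


def two_sources(a: float, b: float, tolerance: float) -> Optional[float]:
    """Two watches: agree and we average; disagree and we cannot say who is wrong."""
    if abs(a - b) <= tolerance:
        return (a + b) / 2
    return None


def vote(readings: list[float], tolerance: float) -> tuple[float, list[float]]:
    """Three or more watches: take the median, then flag readings far from it."""
    if len(readings) < 3:
        raise ValueError("voting needs at least three sources")
    consensus = median(readings)
    outliers = [r for r in readings if abs(r - consensus) > tolerance]
    return consensus, outliers


# Watch readings in minutes past midnight (hypothetical).
print(two_sources(600.0, 660.0, tolerance=2.0))    # None: stuck
print(vote([600.0, 601.0, 660.0], tolerance=2.0))  # (601.0, [660.0])
```

The median is what makes a single liar harmless: one wild reading can move the mean arbitrarily far, but it cannot move the middle value of three past either honest watch.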

Physiologically, human beings cannot tell which way is up and which way is down unless they can see the horizon. The human inner ear, our first source of such information, cannot differentiate gravity from acceleration. The ear fails in its duty whenever the human to which it is attached is inside a moving vehicle, such as an airplane.

The only reliable indication of where up and down reside is the horizon. Pilots flying planes can easily keep the plane level so long as they can see the ground outside. Once they cannot, such as when the plane is in a cloud, they must resort to using technology to “keep the greasy side down” (the greasy side being the underside of any airplane).

That technology is known as an “artificial horizon.” In the early days, pilots synthesized the information from multiple instruments into a mental artificial horizon. Later a device was developed that presented the artificial horizon in a single instrument, greatly reducing a pilot’s mental workload.

But that device, like everything in an airplane, was prone to failure. Pilots were taught to keep using other instruments to cross-check the validity of the artificial horizon or, if the pocketbook allowed, to install multiple artificial horizons in the aircraft.

What is important is that the artificial horizon information was so critical to safety that there was never a single point of reference, or even two. There were always multiples, so that the pilot always had enough information to discern the truth from multiple sources, some of which could be lying.

Information Takers and Information Givers

The machinery in an aircraft can be roughly divided into two classes: information takers and information givers. The first class, the information takers, is the machinery that manages the aircraft’s energy, such as the engines or the control surfaces.

They are the aircraft’s machine working class.

The second class of machinery is the information givers. They are responsible for reporting everything from the benign (are the bathrooms in use?) to the critical (what is our altitude? where is the horizon?).

They are the aircraft’s machine eyes and ears.

Redundancy done right (for its time)

All of the ideas and technology embodied in the Boeing 737 were laid down in the 1960s. That covered everything from the choice of engines, to pressurization, to the approach to redundancy.

And the redundancy approach was simple: two of everything.

Laying that redundancy out in the cockpit was straightforward. One set of information-givers, such as airspeed, altitude and horizon, went on the pilot’s side.

And another, identical set of information-givers went on the co-pilot’s side. That way any failure on one side could be resolved by the pilots, together, agreeing that the other side was the side to watch.

Origin of consciousness in the bicameral mind

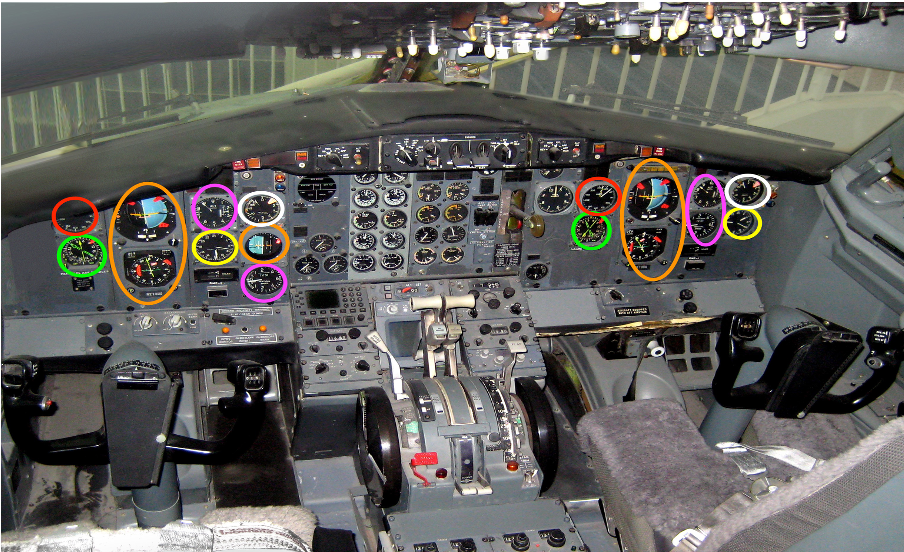

Visualize, if you will, the cockpit of a Boeing 737 as a human brain. There is a left (pilot) side, full of instrumentation (information givers, sensors such as airspeed and angle of attack), a pilot and an autopilot.

And there is a right (co-pilot) side, with the exact same things. In the picture above, items encircled by same-colored ovals are duplicates of one another. For instance, airspeed and vertical speed are denoted by purple ovals. The purple ovals on the left (pilot’s) side get their information from sensors mounted on the outside of the plane, on the left side. The ones on the right get theirs from sensors on the right side.

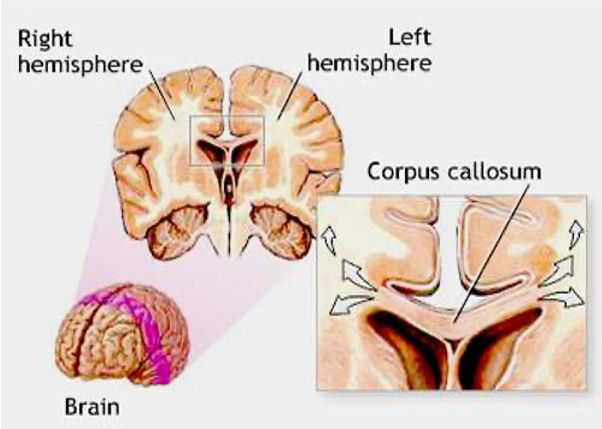

And, like a human brain, there is a corpus callosum connecting those two sides. That connection, however, is limited to the verbal and other communication that the human pilots make between themselves.

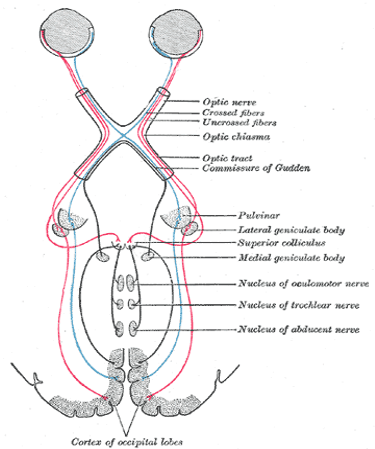

In the human brain, information crosses between the two sides: the right hemisphere processes the left half of the visual field, the left hemisphere the right half, and the corpus callosum lets each share what it sees with the other.

In the 737, however, the machinery on the co-pilot’s side is not privy to the information coming from the pilot’s side (and vice-versa). The machines on one side are alienated from the machines on the other side.

In the 737 it is up to the humans to intermediate. The human pilots are the 737’s corpus callosum.
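The bicameral layout can be modeled in a few lines. Here is a minimal Python sketch, assuming a simplified cockpit in which each side owns a dictionary of information givers and its autopilot reads only that dictionary. The parameter names, values and tolerance are hypothetical. The only place the two sides are ever compared is the crew cross-check, which stands in for the human pilots.

```python
from dataclasses import dataclass, field


@dataclass
class CockpitSide:
    """One hemisphere: its own information givers and its own autopilot."""
    name: str
    givers: dict[str, float] = field(default_factory=dict)

    def autopilot_inputs(self) -> dict[str, float]:
        # This side's autopilot sees only this side's givers, never the other side's.
        return dict(self.givers)


def crew_crosscheck(left: CockpitSide, right: CockpitSide,
                    tolerance: float) -> list[str]:
    """The humans as corpus callosum: list parameters on which the sides disagree."""
    return [name for name, value in left.givers.items()
            if name in right.givers and abs(value - right.givers[name]) > tolerance]


pilot = CockpitSide("pilot", {"airspeed_kt": 250.0, "aoa_deg": 40.0})
copilot = CockpitSide("co-pilot", {"airspeed_kt": 251.0, "aoa_deg": 5.0})

print(pilot.autopilot_inputs())              # only the pilot-side values
print(crew_crosscheck(pilot, copilot, 3.0))  # ['aoa_deg']
```

Remove `crew_crosscheck` and nothing in the model ever notices the 35-degree disagreement.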

The 737 autopilot origin story

The 737 needed an autopilot, of course, and its development was straightforward.

Autopilots in those days were crude and simple electromechanical devices, full of hydraulic lines, electric relays and rudimentary analog integration engines. They did little more than keep the wings level, hold altitude and track a particular course.

Obtaining the necessary redundancy in the autopilot system was as simple as having two of them. One on the pilot’s side, one on the co-pilot’s side. The autopilot on the pilot’s side would get its information from the same information-givers giving the pilot herself information.

The autopilot on the co-pilot’s side would get its information from the same information-givers giving the co-pilot his information.

And only one autopilot would function at a time. When flying on autopilot, it was either the pilot’s or the co-pilot’s autopilot that was enabled. Never both.

And in those simple, straightforward days of Camelot, it worked remarkably well.

In the picture above you can see the column labelled “A/P Engage.” This selects which of the two autopilots is in use, A or B. The A autopilot gets its information from the pilot’s side, the B autopilot from the co-pilot’s side. If you select the B autopilot when the A is engaged, the A autopilot will disengage (and vice versa).
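The A/P Engage behavior described above is a tiny state machine. Here is a minimal Python sketch that models only what the text describes (one autopilot engaged at a time, each fed by one side). The class and method names are hypothetical, and the sketch deliberately ignores any special-case modes the real system may have.

```python
from enum import Enum
from typing import Optional


class Autopilot(Enum):
    A = "pilot's side"
    B = "co-pilot's side"


class APEngagePanel:
    """At most one engaged autopilot; engaging one disengages the other."""

    def __init__(self) -> None:
        self.engaged: Optional[Autopilot] = None

    def engage(self, which: Autopilot) -> None:
        # Selecting A while B is engaged (or vice versa) replaces it: never both.
        self.engaged = which

    def disengage(self) -> None:
        self.engaged = None

    def data_source(self) -> Optional[str]:
        """Which side's information givers the engaged autopilot is reading."""
        return self.engaged.value if self.engaged is not None else None


panel = APEngagePanel()
panel.engage(Autopilot.A)
panel.engage(Autopilot.B)  # A drops out as B engages
print(panel.engaged, panel.data_source())
```

The design choice is visible in the single `engaged` field: there is no state in which both autopilots, and therefore both sides' sensors, are in play at once.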

What this means is that the pilot’s autopilot does not see the co-pilot’s airspeed. And the co-pilot’s autopilot does not see the pilot’s airspeed. Or any of the other information-givers, such as angle of attack.

Once again, the human pilots must act as the 737’s corpus callosum.

The fossil record

JFK was president when the 737’s DNA, its mechanical, electronic and physical architecture, was cast in amber. And that casting locked two immutable traits into the airplane’s fossil record. One, the airplane sat close, really close, to the ground (to make loading passengers and luggage easier at unimproved airports). And, two, the divided and alienated nature of its bicameral automation bureaucracy.

These were things that no amount of evolutionary development could change.

Rise of the machines

Of all the -wares (hardware, software, humanware) in a modern airplane, the least reliable, and thus the most liability-attracting, is the humanware. Boeing, ironically, estimates that eighty percent of all commercial airline accidents are due to so-called “pilot error.” I am sure that if Boeing’s communications department could go back in time, they’d like to revise that to 100%.

With that in mind, it’s not hard to understand why virtually every economic force at work in the aviation industry has on its agenda at least one bullet point addressed to getting rid of the human element. Airplane manufacturers, airlines, everyone would like to get rid of the pilots up front as much as possible. Not because pilots themselves cost much (their salaries are a minuscule portion of the cost of operating an airline), but because they attract so much liability.

The best way to get rid of the liability of pilot error is to simply get rid of the pilots.

Emergence of the machine bureaucracy

In aviation’s early days, there was no machine bureaucracy. Pilots were responsible for processing the information from the givers and turning that into commands for the takers. Stall warning (information giver) activated? Push the airplane’s nose down and increase power (commands to the information takers).

Soon, however, the utility of allowing machines to perform some of the pilot’s tasks became obvious. This was originally sold as a way to ease the pilot’s tactical workload, to free the pilots’ hands and minds so that they could better concentrate on strategic issues – such as the weather ahead.

It did not take long to realize that the equation was backward. The machines worked well when they were subservient to the pilots. But they would work even better when they were superior to the pilots.

And now there was a third class of machinery in the plane. The machine bureaucrat.

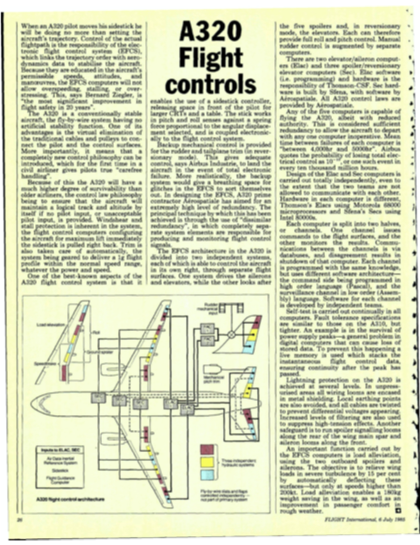

The Airbus consortium, long a leader in advancing the technological sophistication of aviation (its partners succeeded with Concorde where Boeing had utterly failed with its SST, for example), realized this, and in the late 1970s embarked on a program to create the first digital “fly by wire” airliner, the A320.

In a “fly by wire” aircraft, software stands between man and machine. Specifically, the flight controls that pilots hold in their hands are no longer connected directly to the airplane’s information takers, such as the control surfaces and engines. Instead the flight controls become yet another set of information-givers.
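The difference between conventional controls and fly-by-wire can be sketched as two functions. Here is a minimal Python sketch, assuming a hypothetical linear stick-to-surface gain and hypothetical pitch-attitude limits; real control laws are far more elaborate. The point is structural: in the second function the pilot's stick is just one input that software weighs before commanding the information takers.

```python
# Hypothetical values for illustration only.
STICK_GAIN_DEG = 20.0         # surface deflection at full stick
PITCH_UP_LIMIT_DEG = 30.0     # software refuses further nose-up beyond this attitude
PITCH_DOWN_LIMIT_DEG = -15.0  # software refuses further nose-down beyond this attitude


def conventional(stick: float) -> float:
    """Mechanical linkage: the stick position drives the control surface directly."""
    return stick * STICK_GAIN_DEG


def fly_by_wire(stick: float, pitch_deg: float) -> float:
    """The stick is an information giver; software decides what the surface does."""
    stick = max(-1.0, min(1.0, stick))  # positive = nose up, range [-1, 1]
    demand = stick * STICK_GAIN_DEG
    if demand > 0 and pitch_deg >= PITCH_UP_LIMIT_DEG:
        return 0.0  # pilot asked for more nose-up; software declines
    if demand < 0 and pitch_deg <= PITCH_DOWN_LIMIT_DEG:
        return 0.0  # pilot asked for more nose-down; software declines
    return demand
```

For example, `fly_by_wire(1.0, pitch_deg=30.0)` returns `0.0`: the pilot pulled, and the machine bureaucrat said no.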

Automation done right

When Airbus floated the idea of a “fly-by-wire” aircraft, the A320, it knew it had to do it right. It was a pioneer in a technology that would need to prove itself to a skeptical industry, not to mention the public. It had to build trust that it could make something that was safe.

And it knew that the way to do it right was with a maximum amount of empathy. Toward that end, Airbus was extremely transparent about exactly how the A320’s automation would work. They told the public (this was in the mid 1980s) that the automation system would employ an army of redundant sensors, like the kind implicated in the MCAS crashes.

They told the public that the system would employ an army of redundant computers, each able to take over the tasks of any computer gone rogue, or down. And they told the public that the system would use an army of disparate human groups. The system’s design was laid down on paper and disparate groups from disparate companies had to implement identical solutions to the same designs.

Everybody worked in an atmosphere of total transparency.

That way if any of the implementing companies suffered from an inadequacy of empathy, if any of them tried to cut a corner or didn’t understand what their jobs were, it would be countered by the results from the companies that did.

And what they produced was a set of machine bureaucrats. They take information from the machine eyes and ears (airspeed, angle of attack, altitude) as well as from the pilots, both human and automatic.

And they evaluate that information (its quality, its reliability and its probity) on an equal level. Which means with an equal amount of skepticism.
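The Airbus approach described above, with independent teams implementing the same specification and their results checked against one another, is a technique usually called N-version programming (dissimilar redundancy). Here is a minimal Python sketch, assuming a toy specification (average the sensor readings) and three hypothetical team implementations, one of which cuts a corner. A majority voter treats every output with the same skepticism.

```python
import statistics
from typing import Callable, Optional, Sequence

# Toy specification (hypothetical): return the mean of the sensor readings.


def team_a(readings: Sequence[float]) -> float:
    return sum(readings) / len(readings)


def team_b(readings: Sequence[float]) -> float:
    return statistics.fmean(readings)


def team_c(readings: Sequence[float]) -> float:
    # The corner-cutter: floor division silently drops the fractional part.
    return sum(readings) // len(readings)


def majority_vote(outputs: Sequence[float], tolerance: float) -> Optional[float]:
    """Accept an output only if more than half of all outputs agree with it."""
    for candidate in outputs:
        agreeing = [o for o in outputs if abs(o - candidate) <= tolerance]
        if 2 * len(agreeing) > len(outputs):
            return candidate
    return None  # no majority: fail loudly rather than guess


versions: list[Callable[[Sequence[float]], float]] = [team_a, team_b, team_c]
readings = [4.0, 5.0, 5.5]
outputs = [v(readings) for v in versions]
print(outputs, majority_vote(outputs, tolerance=1e-9))
```

Team C's shortcut is outvoted by the two teams that did the job, which is exactly the countering effect the text describes.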

The human pilots were demoted out of the bureaucracy and into the role of information-givers. Alongside things like the airspeed sensors, the angle of attack sensors, and all the other sensors the pilots were only there to serve as additional eyes and ears for the machines.

Next step, bathroom monitor.

The airlines saw the writing on the wall and were delighted. Boeing, caught with its pants around its ankles, embarked on a huge anti-automation campaign – even as it struggled to adapt other aspects of the A320 technology, such as its huge CFM56 engines, to Boeing’s already-old 737 airframe.

Boeing’s strategy worked well for quite some time. By retrofitting the A320’s engines to the 737, Boeing was able to match the A320’s fuel economics. And a vigorous anti-automation campaign, aided by pilots’ unions and a public fearful of machine control, kept 737 sales rolling.

It all comes down to money

Wall Street loves technology that involves little or no capital investment. Think Uber. That is why it hates old-line manufacturing, with its expensive factories and machinery.

And people. Which is what Boeing’s managers – its board of directors – understood when they embarked on an ambitious program to re-make the company: away from its old-line industrial roots, which Wall Street abhorred, and toward something along the lines of Apple Computer.

The liquidation of capital

There were a lot of components to that transformation. Including firing all the old-line engineers in unionized Washington State. And replacing them with a cadre of unskilled workers, such as those putting together the 787 “Dreamliner” in antebellum South Carolina.

“Quit behaving like a family and become more like a team” – Boeing CEO Harry Stonecipher, 1998

Putting together, not making, because another relentless part of the transformation was the liquidation of Boeing’s capital plant. Like another once-giant aviation company, Curtiss-Wright, Boeing’s managers had drunk the Wall Street Kool-Aid and believed, with no empirical evidence, that the best way to make money by making things was not to make things at all.

Think of it as aviation’s version of Mel Brooks’s black comedy The Producers (The Producers was about making money by losing money).

A key Wall Street shibboleth along those lines is a metric known as “Return on Net Assets,” or RONA. RONA says “Making something with something is expensive. So make something, but make it with nothing.”
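RONA is conventionally computed as net income divided by net assets (fixed assets plus net working capital). Here is a minimal Python sketch with hypothetical figures that shows the Producers logic: a company that owns less can report a better RONA even while earning less.

```python
def rona(net_income: float, fixed_assets: float, net_working_capital: float) -> float:
    """Return on Net Assets = net income / (fixed assets + net working capital)."""
    net_assets = fixed_assets + net_working_capital
    if net_assets <= 0:
        raise ValueError("net assets must be positive")
    return net_income / net_assets


# Hypothetical figures in $ billions, for illustration only.
makes_things = rona(net_income=5.0, fixed_assets=40.0, net_working_capital=10.0)
outsources = rona(net_income=4.0, fixed_assets=10.0, net_working_capital=10.0)

print(f"Makes its own parts: RONA {makes_things:.0%}")  # higher profit, lower RONA
print(f"Outsources parts:    RONA {outsources:.0%}")    # lower profit, higher RONA
```

Shrinking the denominator (selling off factories, tooling and the people who run them) raises the ratio faster than the lost profit lowers it.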

Think of it as “Springtime for Hitler” (“Springtime for Hitler” was the title of a show within The Producers assumed to be so awful that it was guaranteed to lose money, and thus make money).

Springtime for Hitler, in the Wall Street world, means that US manufacturing companies stop making anything themselves. Instead, everything they sell, they get other people to make for them: using exploited labor, producing inferior product on wheels greased by distrust, fear and an utter lack of shared mission or shared sacrifice.

That means, for example, that the 787s being assembled in South Carolina are being put together by people whose last job was working the fry pit at the local burger joint using parts made by equally, and intentionally, marginalized people from half a world away.

What could go wrong?

Well, Boeing itself only makes about ten percent of each 737. The rest, ninety percent, is made by its subcontractors and suppliers.

That is a situation in which Boeing is extremely exposed to any breakdown in empathy between itself and its suppliers, yet that risk is not factored into its stock price by Wall Street.

That’s what could go wrong.

"[If Boeing CEO Condit] can use the window of opportunity opened by the layoffs, Boeing could be transformed into a global enterprise that's much less dependent on the U.S. for both brawn and brains". BusinessWeek, 2002

Software is killing us

As a forty-year veteran of the software development industry and a person responsible for directing teams that generated millions of lines of computer code, I will tell you something wonderful about the industry.

Anything you can do by building hardware – by casting metal, sawing wood, tightening fasteners or running hoses – you can do faster, cheaper, and with less organizational heartache with software. And you can do it with far fewer prying eyes, scrutiny or oversight.

And the icing on the cake: if you screw it up, you can pass along (“externalize,” as the economists say) the costs of your mistakes to your customers. Or in this case, the flying public.

The Rockwell Collins EDFCS-730

As mentioned earlier in this article, the original 737 autopilots were a collection of electromechanical controls made out of metal, hydraulic fluid, relays, etc. And there were two of them, each connected to either the pilot’s or co-pilot’s information-givers.

Over time, more and more of the autopilots’ electromechanical functions were replaced or supplanted by digital components. In the most current autopilot, called the EDFCS-730, that supplanting is total. The EDFCS-730 is “fully digital” – meaning no more metal, hydraulic fluid, etc. Just a computer, through and through.

And, just like the original autopilots, there are only two EDFCS-730s (the A320neo has the equivalent of five, and they are far more comprehensive). And each one can only see the information givers on its own side (all of the computers in the A320neo can see all of the givers). Remember, that architecture was cast in amber.

The EDFCS-730 is “Patient Zero” of the MCAS story. It offered Boeing an enormous set of opportunities. First, it was far cheaper on a lifecycle basis than the old units it replaced. Second, it was trivial to re-configure the autopilot when new functionality was needed – such as a new model of 737.

Third, its operating laws were embedded in software – not hardware. That meant that changes could be made quickly, cheaply and with little or no oversight or scrutiny. One peculiarity of aviation is that there are far fewer standards, practices, and requirements for making software than there are for making hardware.

A function that would draw an army of auditors, regulators and overseers in hardware gets by with virtually no oversight if done in software.

There is a USB port in the 737 cockpit for updating the EDFCS-730 software. Want to update it with new software? You need nothing more than a USB keystick.

It’s not hard to see why software has become an unbelievably attractive “manufacturing” option.

Longitudinal stability

Late in the 737 MAX’s development, after actual test flying began, it became apparent that there was a problem with the airframe’s longitudinal stability. We do not know how bad that problem is, nor do we know its exact nature. But we know it exists because if it didn’t, Boeing would not have felt the need to implement MCAS.

MCAS is implemented in (“lives in”) the EDFCS-730. It pushes the 737 MAX’s nose down when the system believes that the airplane’s angle of attack is too high. For more on that process, see https://spectrum.ieee.org/aerospace/aviation/how-the-boeing-737-max-disaster-looks-to-a-software-developer
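To make the single-sensor problem concrete, here is a deliberately simplified Python sketch of a threshold trigger that trusts one angle of attack sensor. It is an illustration under stated assumptions, not Boeing’s MCAS code: the function name `mcas_should_command_nose_down` and the threshold `AOA_THRESHOLD_DEG` are hypothetical, and the real activation conditions are more involved. What it shows is structural: with only one input, a failed vane reading over seventy degrees is indistinguishable from a genuinely high angle of attack.

```python
# Hypothetical illustration only: NOT Boeing's MCAS logic or parameters.
AOA_THRESHOLD_DEG = 14.0  # hypothetical activation threshold, in degrees


def mcas_should_command_nose_down(aoa_deg: float) -> bool:
    """Single-sensor trigger: acts on whatever one AOA vane reports."""
    # No second opinion is consulted, so a failed vane reporting an
    # impossible value triggers exactly like a genuine high angle of attack.
    return aoa_deg > AOA_THRESHOLD_DEG


if __name__ == "__main__":
    print(mcas_should_command_nose_down(5.0))   # False: plausible reading
    print(mcas_should_command_nose_down(74.5))  # True: faulty vane still triggers
```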

I believe that Boeing anticipated the longitudinal stability issue arising from the MAX’s larger engines and their placement. In the late 1970s Boeing had encountered something similar when fitting the CFM56 engines to the 737 “Classic” series. There it countered the issue with a set of aerodynamic tweaks to the airframe, including large strakes affixed to the engine cowls which are readily visible to any passenger sitting in a window seat over or just in front of the wing.

When the issue of longitudinal stability arising from engine size and placement arose again with the larger-still CFM LEAP engines on the 737 MAX, Boeing had a tool at its disposal that it had not had with previous generations of 737.

And that tool was the EDFCS-730 autopilot.

Boeing had a choice: correct the stability problems in the traditional manner (meaning expensive changes to the airframe) or quickly shove some more software into the EDFCS-730, via a USB keystick, to make the problem go away.

One little problem…

In the olden days all of the sensors outside the plane that gathered “air data” were connected directly to their respective cockpit instruments. The pitot tubes had plumbing connecting them to the air speed instruments, the static ports connected directly to the vertical speed and altimeter instruments, etc.

This turned into a bit of a plumbing nightmare and also made it difficult to share that data with a larger number of instruments and devices. The solution was a box called an Air Data Inertial Reference Unit (“ADIRU” in the diagram, below), a kind of Grand Central Station of air data. The “IRU” part of it refers to the plane’s inertial reference platform, which provides the plane’s location and attitude (pointed up, down, sideways, etc.)

ADIRUs were not common on commercial airliners (I can think of none, actually) when the 737 was first certified and, indeed, the first 737s did not have any. But as newer models emerged and the technology became commonplace, the 737 gained ADIRUs as well. However, it gained them in a way that did not fundamentally change the bicameral nature of its information givers.

The A320 never had the same bicameral architecture. The A320 was born with ADIRUs and it has not two but three (see the “two watches” problem, above). In the A320 all three ADIRUs are available to all five Flight Control Computers (FCCs), all the time – making it relatively easy for any of the FCCs to read from any of the three ADIRUs and determine if one of the ADIRUs is not telling the truth.
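The advantage of three sources that every computer can read is that the computers can vote. Below is a minimal Python sketch of one common voting idea, median selection with a tolerance check. The function `vote_three`, the `TOLERANCE_DEG` value and the source names are hypothetical, and this is not a description of Airbus’s actual monitoring logic, which is more elaborate. The median of three readings cannot be dragged away by a single wild value, so the source that strays too far from it can be singled out as the one not telling the truth.

```python
from statistics import median

TOLERANCE_DEG = 3.0  # hypothetical agreement tolerance, in degrees


def vote_three(readings: dict[str, float]) -> tuple[float, list[str]]:
    """Median-select among three sources and name any that disagree with it."""
    if len(readings) != 3:
        raise ValueError("expected exactly three sources")
    voted = median(readings.values())
    # A single faulty source cannot pull the median outside the range of
    # the two healthy ones, so whichever source sits far from it is the suspect.
    suspects = [name for name, value in readings.items()
                if abs(value - voted) > TOLERANCE_DEG]
    return voted, suspects


if __name__ == "__main__":
    print(vote_three({"ADIRU 1": 4.8, "ADIRU 2": 5.1, "ADIRU 3": 74.5}))
    # (5.1, ['ADIRU 3'])
```

The median rather than the mean is used because one faulty value can drag a mean arbitrarily far, while the median of three always stays between the two healthy readings.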

One more small note, before we go on. The industry uses the term “Flight Control Computer,” (FCC). On all aircraft that I can think of, the FCCs are the computers used to intermediate between the pilot’s controls and the airplane’s control surfaces. They are where the software that stands between man and machine “lives.”

Airbus, for example, draws an explicit distinction between the FCCs and the autopilot computers. They are two separate functions.

Boeing chooses to call the autopilot computers (EDFCS-730s) “Flight Control Computers.” In the 737, which is not a fly-by-wire aircraft, there is no differentiation between FCC and autopilot. They are one and the same.

I suspect this has more to do with marketing than anything else, but readers should take away from it one important thing: in a real automation architecture, flight control functions and autopilot functions are distinct. In the 737 MAX, Boeing has attempted to gain the marketing advantage of having a “Flight Control Computer” architecture without doing the real work required to implement a robust FCC architecture. Instead, it is cramming more and more automation functions into boxes never designed for them: the autopilot boxes.

The result is Frankenplane.

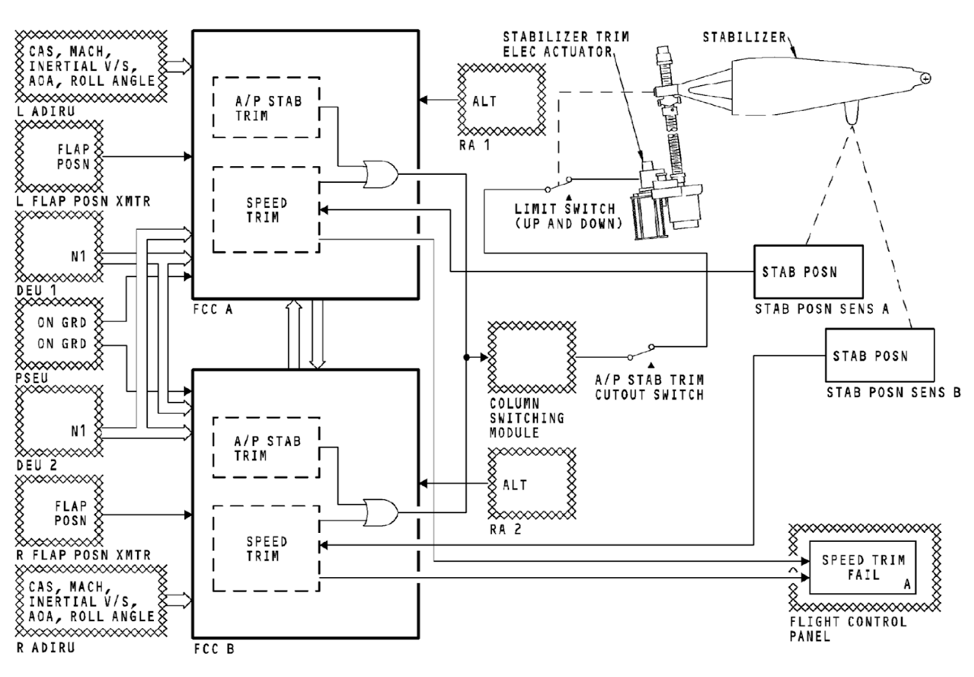

Above is a diagram of the automation architecture of the 737 NG and 737 MAX. The two components labelled “FCC A” (Flight Control Computer) and “FCC B” are the EDFCS-730s.

Two things stand out in the diagram:

- There appears to be a link (a “corpus callosum”) between FCC A and FCC B (vertical arrows between them)

- The left Angle of Attack (AOA) sensor (contained in “L ADIRU”) is connected to FCC A & the right Angle of Attack (AOA) sensor (contained in “R ADIRU”) is connected to FCC B

At this point my readers may be justifiably angry with me. After all, I’ve been going on and on about the bicameral nature of the 737 architecture and the lack of an electronic corpus callosum between the two “flight control computers” (autopilots). Yet it is clearly there in the diagram, above. I feel your pain.

In my defense, I ask that you remember one thing: we know that the initial MCAS implementation did not use both of the 737’s angle of attack sensors, despite the link between the FCCs that should have allowed it to do so. It should have been relatively easy for the FCC in charge of a given flight, running MCAS, to ask the FCC not in charge to pass along its AOA information over the link in the diagram.

Why did they not do this? Forensically, I can think of two possible reasons:

1. It was just “too hard.” The software in the EDFCS-730 is too brittle and crufty (these are software technical terms, believe me), there is something about the nature of the link (say, that it is a 150 baud serial link; note for the pedants, I am not saying it is), you have to “wake up” the standby FCC, etc.

2. Boeing deliberately did not want to use both AOA sensors because, as I said at the beginning, “a man with one AOA sensor knows what the angle of attack is, a man with two AOA sensors is never sure.” That is, if Boeing used two sensors, it would have had to deal with the problem of what to do when they disagreed. And that would have meant training, which would have violated “ship the airplane.”

I tend to tilt towards #1. I think it’s just really, really hard to do, and I think that the “boot up” problems (see end of this article) point to exactly that. If so, that is yet another damning reason why neither the FAA nor anyone else should ever certify MCAS as a safe solution to the aircraft’s longitudinal stability problem.

That said, it’s never “either/or.” The answer could be “both #1 and #2.”
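The second reason can be made concrete. Assuming the FCC in charge could fetch the other side’s angle of attack over the inter-FCC link shown in the diagram, a cross-check might look like the minimal Python sketch below. `cross_check`, `AoaStatus` and `DISAGREE_LIMIT_DEG` are hypothetical names and values, not anything from the EDFCS-730. With two sensors, the software can detect that something is wrong, but not which vane is wrong.

```python
from enum import Enum

DISAGREE_LIMIT_DEG = 5.0  # hypothetical disagreement limit, in degrees


class AoaStatus(Enum):
    AGREE = "agree"
    DISAGREE = "disagree"  # something is wrong, but not which vane


def cross_check(own_aoa_deg: float, other_aoa_deg: float) -> AoaStatus:
    """Compare the local AOA with the value relayed from the other FCC."""
    if abs(own_aoa_deg - other_aoa_deg) > DISAGREE_LIMIT_DEG:
        # Two sources, two answers, and no majority to follow: a conservative
        # response would be to inhibit AOA-driven automation and alert the crew.
        return AoaStatus.DISAGREE
    return AoaStatus.AGREE
```

`cross_check(74.5, 5.1)` returns `AoaStatus.DISAGREE`, and `cross_check(5.3, 5.1)` returns `AoaStatus.AGREE`. Note that there is no branch that picks a winner: resolving a disagreement takes a third source, as with the A320’s three ADIRUs, or a human pilot, which in turn means training.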

Automation done wrong

I have spoken to individuals at all of the companies involved and have yet to find anyone at Rockwell Collins (now Collins Aerospace) who can direct me to the individuals tasked with implementing the MCAS software. Collins is, predictably, extremely reluctant to take ownership of either the EDFCS-730 or its software and has, just as predictably, kept its mouth firmly shut for over a year.

I have been assured repeatedly that the internal controls within Collins would never have allowed software of such low quality to go out the door, and that none of their other autopilot products share much, if any, commonality with the EDFCS-730 (which exists for, and only for, the 737 NG and 737 MAX aircraft).

That, together with off the record communications, leads me to believe that Boeing itself is responsible for the EDFCS-730 software. Most important, for the MCAS component. The responsibility for creating MCAS appears to have been farmed out to a low-level developer with little or no knowledge of larger issues regarding aviation software development, redundancy, information takers, information givers, or machine bureaucracy.

And I believe this is deliberate. Because a more experienced developer, of the kind shown the door by the thousands in the early 2000s, would have immediately raised concerns about the appropriateness of using the EDFCS-730, a glorified autopilot, for the MCAS function – a flight control function.

They would have immediately understood that the lack of a robust electronic corpus callosum between the left and right autopilots made impossible the use of both angle of attack sensors in MCAS’ automatic deliberations.

They would have pointed out that the software needed to realize that an angle of attack that goes from the low teens to over seventy degrees, in an instant, is structurally and aerodynamically impossible.

And not to point the nose at the ground when it does. Because the data, not the airplane, is wrong.

And if they had, the families and friends of the 346 dead would have been spared their bottomless grief.

Instead, Wall Street’s empathy discount sealed their fate.
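The plausibility check described above, recognizing that an angle of attack cannot leap from the low teens to over seventy degrees in an instant, amounts to a rate limit on the sensor signal. The Python sketch below illustrates the idea under stated assumptions: a fixed sample interval, and a hypothetical `MAX_AOA_RATE_DEG_PER_S` limit chosen for illustration rather than derived from any airframe. The names `is_plausible` and `filter_aoa` are likewise hypothetical. A real monitor would also have to allow for gusts and turbulence.

```python
MAX_AOA_RATE_DEG_PER_S = 20.0  # hypothetical physical rate limit, degrees/second


def is_plausible(prev_aoa_deg: float, new_aoa_deg: float, dt_s: float) -> bool:
    """Reject AOA changes faster than the airframe could plausibly produce."""
    if dt_s <= 0:
        raise ValueError("time interval must be positive")
    rate = abs(new_aoa_deg - prev_aoa_deg) / dt_s
    return rate <= MAX_AOA_RATE_DEG_PER_S


def filter_aoa(samples: list[float], dt_s: float) -> list[bool]:
    """Flag each sample after the first as plausible (True) or not (False)."""
    if not samples:
        return []
    flags: list[bool] = []
    last_good = samples[0]  # assume the first sample is trustworthy
    elapsed = 0.0           # time since the last plausible sample
    for value in samples[1:]:
        elapsed += dt_s
        ok = is_plausible(last_good, value, elapsed)
        flags.append(ok)
        if ok:
            # Only plausible samples move the baseline.
            last_good = value
            elapsed = 0.0
    return flags
```

With samples taken every 0.1 seconds, `filter_aoa([12.0, 12.5, 74.5, 74.5], dt_s=0.1)` returns `[True, False, False]`: the jump to 74.5 degrees is rejected, and because each sample is compared against the last plausible value, a vane stuck at the bad reading does not immediately become the new baseline.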

Quick, dirty and deadly

The result is, as they say, history. Wall Street had stripped Boeing’s leadership cadre of any intrinsic business acumen. And that leadership had no skills beyond an extraordinary talent for intimidation through implied and explicit threats.

Empathy has no purchase in such an environment. The collapse of trust relationships between individuals within the company and, more important, between the company and its suppliers fertilized the catastrophe that now engulfs the enterprise.

From high in the company came a diktat: ship the airplane. Without empathy, there was no ability to hear cautions about the method chosen to ship it (low-quality software).

The pathology of Boeing’s demise

Much has already been written about the effect of McDonnell Douglas’ takeover of Boeing. John Newhouse’s Boeing vs. Airbus is the definitive text on the matter, with L.J. Hart-Smith’s “Out-sourced profits – The cornerstone of successful subcontracting” being the devastating academic adjunct.

Recently Marshall Auerback and Maureen Tkacik have covered the subject comprehensively, leaving no doubt about our society’s predilection for rewarding elite incompetence handsomely.

Alec MacGillis’ “The Case Against Boeing” ( https://www.newyorker.com/magazine/2019/11/18/the-case-against-boeing ) lays out the human cost of Wall Street’s murderous rampage in a manner that should leave claw marks on the chair of anyone reading it.

Charles Pezeshki’s “More Boeing Blues” ( https://empathy.guru/2016/05/22/more-boeing-blues-or-whats-the-long-game-of-moving-the-bosses-away-from-the-people/ ) is arresting in its prescience.

Boeing’s PR machine has repeatedly lied about the origin and nature of MCAS. It has tried to imply that 737 MCAS is just a derivation of the MCAS system in the KC-46. It is not.

It has tried to blame the delays in re-certification on everything from “cosmic rays” (a problem the rest of the industry solved when Eisenhower was president) to increased diligence (up is the only direction from zero). Most of the press has bought this nonsense, hook, line and sinker.

More nauseatingly, it promotes what I will call the “brown pilot theory.” Namely, that it is pilot skill, not Wall Street malevolence, that is responsible for the dead. In service of that theory it has enlisted aviation luminary (and a personal hero-no-more of mine) William Langewiesche.

For the best response to that, please see Elan Head’s “The limits of William Langewiesche’s ‘airmanship’” ( https://medium.com/@elanhead/the-limits-of-william-langewiesches-airmanship-52546f20ec9a )

Those individuals “get it.” Missing here are accurate pontifications from much of the aviation press, the aviation consultancies and the financial advisory firms, all of whom have presented to the public a collective face of “this is interesting, and newsworthy, but soon the status quo will be restored.”

A well, poisoned

Boeing’s oft-issued eager and anticipatory restatements of 737 MAX recertification together with its utter failure to actually recertify the aircraft invite questions as to what is actually going on. It is now over a year since the first crash and coming up on the anniversary of the second.

Yet time stands still.

What was obvious, months ago, was that the software comprising MCAS was developed in a state of corporate panic and hurry. More important, it was developed with no oversight and no direction other than to produce it, get it out the door, and make the longitudinal stability problem go away as quickly, cheaply, and silently as a software solution would allow.

What became clear to me, subsequently, was that all of the software in the EDFCS-730 was similarly developed. And that when the disinfectant of sunlight shines on the entire body of EDFCS-730 software, going back decades, the entertainment value – as my late wife’s father would say – will be “zero.”

The FAA was caught with its hand in the cookie jar. The FAA’s loathsome Ali Bahrami, nominally in charge of aviation safety, looked the other way as Boeing fielded change after deadly change to the 737, with nary a twitter from the agency whose one job was to protect the public. All in the hope that the revolving door would, in time, spin everyone toward its bounty.

Collapse

Recent headlines speak in vague terms about Boeing’s inability to get the two autopilots communicating on “boot up.” Forensically, what that means is that Boeing has attempted to create a functional electronic corpus callosum between the two, so that the one in charge can access the sensors of the one not in charge (see “One little problem…,” above).

And it has failed in that attempt.

Which, if you understand where Boeing the company is now, is not at all surprising. Nor is Boeing’s recent revelation that re-certification of the 737 MAX has been pushed back to “mid-year” 2020. Applying a healthy discount to Boeing’s public relations prognostications, that date is accurately translated as “never.”

For it was never realistic to believe that a blindered, incompetent, empathy-desert like Boeing, which had already killed 346 people, could learn from, much less fix, its mistakes.

This was driven infuriatingly home with today’s quotes from new CEO David Calhoun. As the Seattle Times reported, Calhoun’s position is:

“I don’t think culture contributed to that miss,” he said. Calhoun said he has spoken directly to the engineers who designed MCAS and that “they thought they were doing exactly the right thing, based on the experience they’ve had.”

This is as impossible as it is Orwellian. It shows that Boeing’s leadership is unwilling to face (and probably unaware of) the root issues. MCAS is not just bad engineering.

“When people say I changed the culture of Boeing, that was the intent, so it’s run like a business rather than a great engineering firm” – Boeing CEO Harry Stonecipher, 2004

It was the inevitable result of the cutting of the sinews of empathy, sinews necessary for any corporation to stand on its own two feet. Boeing is not capable of standing any more and Calhoun’s statements are the proof.